LAS VEGAS, January 5 (AJP) - Vera Rubin, NVIDIA's next-generation AI computing platform slated for release in the second half of 2026, is designed not as a single product but as a full-stack platform spanning AI training, inference and deployment across large-scale systems, according to its creator on Monday (local time).

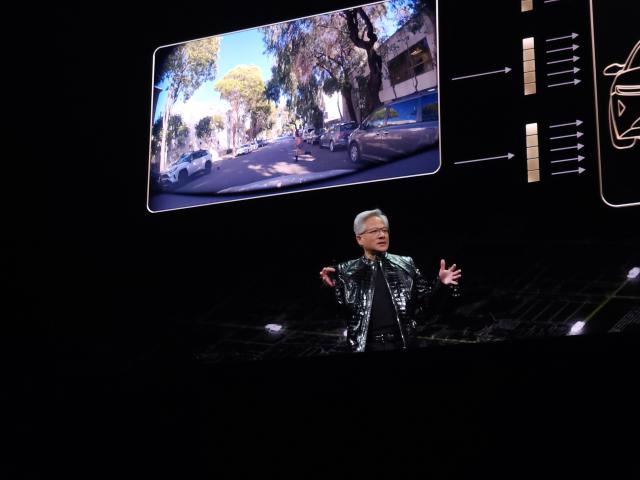

Unveiling the architecture, Jensen Huang, NVIDIA’s founder and chief executive, said Vera Rubin represents a shift toward platform-level computing aimed at powering end-to-end AI workloads, from model development to real-world deployment at scale.

Clad in his signature leather jacket, Huang introduced the new platform at a press conference held at the BleauLive Theater inside the Fontainebleau Las Vegas hotel, ahead of the opening of CES 2026.

Introducing the system as "One Platform for Every AI," Huang positioned Vera Rubin as a unified foundation designed to support AI training, inference and deployment at scale, rather than as a single chip or product line.

Opening his keynote, Huang placed the launch within a broader cycle of technological change. "Every 10 to 15 years, the computer industry resets," he said, pointing to past shifts from PCs to the internet, and from cloud computing to mobile platforms.

This time, he argued, the transition is more disruptive. "Two platform shifts are happening at once. Applications are being built on AI, and how we build software has fundamentally changed."

Huang stressed that AI is no longer simply another layer in computing. Instead, it is reshaping the entire stack. "You no longer program software. You train it," he said.

"You do not run it on CPUs. You run it on GPUs." Unlike traditional applications that rely on precompiled logic, AI systems now generate outputs dynamically, producing tokens and pixels in real time based on context and reasoning.

That shift, Huang said, is driving an unprecedented surge in demand for computing power. As models grow larger and reasoning becomes central to performance, both training and inference workloads have expanded sharply. "Models are growing by an order of magnitude every year," he said, noting that test-time scaling has turned inference into a thinking process rather than a single response. "All of this is a computing problem. The faster you compute, the faster you reach the next frontier."

Vera Rubin is NVIDIA’s response to that challenge. Named after astronomer Vera Florence Cooper Rubin, the platform is built through what NVIDIA calls extreme codesign, integrating six newly developed chips, including the Vera CPU and the Rubin GPU, into a single system. According to NVIDIA, this approach allows large mixture-of-experts models to be trained with roughly one-fourth as many GPUs and cuts inference token costs by up to 10x compared with the Blackwell platform.

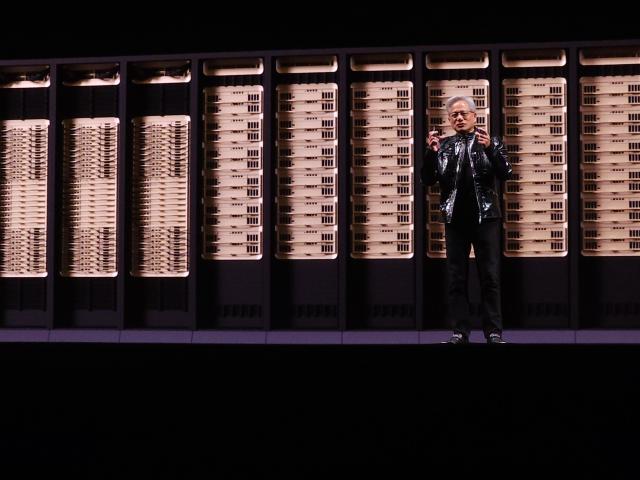

Huang highlighted the platform’s rack-scale architecture as a key departure from earlier designs. The Vera Rubin NVL72 system connects 72 GPUs using sixth-generation NVLink technology, creating internal bandwidth at a scale rarely seen in data centers. "The rack provides more bandwidth than the entire internet," Huang said, underscoring how data movement has become a limiting factor for modern AI systems.

Memory was another issue Huang addressed directly. As AI models move toward multi-step reasoning and longer interactions, the amount of context they must retain has outgrown on-chip memory. Vera Rubin introduces a new inference context memory platform powered by BlueField-4 processors, allowing large volumes of key-value cache data to be stored and shared locally. Huang said the goal is to reduce network congestion while maintaining consistent inference performance at scale.
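To give a sense of why context outgrows on-chip memory, the size of a transformer's key-value cache can be estimated with a standard back-of-the-envelope formula. The sketch below assumes a hypothetical model (80 layers, 8 key-value heads, 128-dimensional heads, 16-bit values) and a 128K-token context; these dimensions are illustrative only and are not NVIDIA or vendor figures.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len,
                   bytes_per_value=2, batch_size=1):
    """Rough key-value cache size in bytes for a transformer decoder.

    Every layer keeps one key vector and one value vector (hence the
    factor of 2) per key-value head, for every token in the context.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value * batch_size


# Hypothetical model dimensions, chosen for illustration only.
size = kv_cache_bytes(num_layers=80, num_kv_heads=8, head_dim=128, seq_len=131_072)
print(f"{size / 2**30:.0f} GiB per sequence")  # prints "40 GiB per sequence"
```

Because the cache grows linearly with both context length and the number of concurrent sessions, long multi-step reasoning workloads add up quickly, which is the kind of pressure a shared context memory tier is meant to absorb.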

Energy efficiency and system reliability were also emphasized. Despite significantly higher compute density, Huang said Vera Rubin systems are cooled using hot water at about 45 degrees Celsius, eliminating the need for energy-intensive chillers. The platform also introduces rack-scale confidential computing, encrypting data across CPUs, GPUs and interconnects to protect proprietary models during training and inference.

Throughout the keynote, Huang repeatedly framed NVIDIA’s role as extending beyond chip design.

"AI is a full stack," he said. "We are reinventing everything, from chips to infrastructure to models to applications." He added that this approach is intended to support what he described as the next phase of AI deployment, built around large-scale AI factories operated by cloud providers, enterprises and AI labs.

NVIDIA said Rubin-based products will be rolled out through partners beginning in the second half of 2026, with cloud providers, AI labs and enterprise customers expected to be among the early adopters.

Copyright ⓒ Aju Press All rights reserved.
