SEOUL, January 28 (AJP) - Samsung Electronics and SK hynix are set to begin mass production of sixth-generation high-bandwidth memory (HBM4) as early as next month, intensifying competition in the fast-growing market for artificial intelligence semiconductors.

Industry sources said on Wednesday that the two South Korean companies plan to start HBM4 mass production in February, with Samsung running lines at its Pyeongtaek campus and SK hynix at its Icheon facility.

The move signals that quality validation by key customers, including Nvidia, is nearing completion and that large-volume supply orders are imminent.

Analysts say the company that ramps up production first could secure an early advantage in the next phase of the AI chip race.

Samsung is seeking to regain technological leadership by moving early with HBM4. The company recently passed final HBM4 quality tests conducted by Nvidia and AMD and is preparing to begin formal deliveries next month. Samsung lost ground to SK hynix in earlier generations such as HBM3 and HBM3E but has expressed confidence in its technological edge for HBM4.

Samsung’s HBM4 uses a 4-nanometer foundry process for the logic die and sixth-generation 10-nanometer-class DRAM, a combination designed to maximize processing speed and power efficiency.

SK hynix, meanwhile, is aiming to defend its market lead by deepening ties with major technology firms beyond Nvidia. The company has been confirmed as the sole supplier of HBM3E for Microsoft’s in-house AI accelerator, the Maia 200, unveiled on Jan. 26. The chip uses six stacks of SK hynix’s 12-layer HBM3E products.

The development underscores SK hynix’s expanding role not only in Nvidia’s supply chain but also among large technology companies such as Google, Amazon and Microsoft.

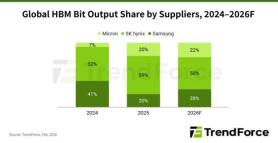

SK hynix has said it expects to maintain a share of more than 50 percent of the HBM4 market, citing its cooperation with Nvidia from the development stage of the next-generation Rubin graphics processing unit.

HBM4 is widely seen as a potential game-changer for AI accelerators from 2026 because it offers roughly double the bandwidth of current-generation products, which would significantly boost AI computing performance. Bank of America forecasts the global HBM market will reach $54.6 billion this year, up 58 percent from a year earlier.

* This article, published by Aju Business Daily, was translated by AI and edited by AJP.
