SEOUL, February 08 (AJP) - Samsung Electronics is set to begin mass shipments of its sixth-generation high-bandwidth memory (HBM) chips, dubbed HBM4, later this month, industry sources said Sunday.

The chips will roll out from the third week of February, after the Lunar New Year holiday break. The move thrusts Samsung, long a laggard in HBM, into the front tier, as HBM4 is expected to become the standard for next-generation AI chips.

The shipment schedule has been finalized to align with the production of Nvidia's next-generation AI accelerator, known as Vera Rubin.

Samsung has reportedly completed quality verification and received purchase orders, with the latest order including a significant increase in sample volumes for final module testing.

Kim Jae-jun, vice president of Samsung's memory business, confirmed the production timeline during a fourth-quarter earnings call last month.

He stated that mass production of HBM4 chips with speeds of 11.7Gbps was already underway for scheduled delivery in February. Nvidia is expected to officially unveil the Vera Rubin platform featuring Samsung's HBM4 at the GTC 2026 conference next month.

The HBM4 chips feature specifications that exceed current industry standards. While the JEDEC standard speed for HBM4 is set at 8Gbps, Samsung's product achieves transfer speeds of 11.7Gbps, a 22 percent improvement over the previous HBM3E generation.

Memory bandwidth per stack has increased to 3 terabytes per second, while a 12-high stack provides 36GB of capacity. The company plans to expand this to 48GB using 16-high stacking technology in the future.

To achieve these performance gains, Samsung integrated its 10-nanometer-class sixth-generation DRAM with its 4-nanometer foundry process. The company is promoting a one-stop solution that combines logic, memory, foundry, and packaging to streamline production and improve energy efficiency, which is expected to lower power consumption and cooling costs in data centers.

The move comes as competition for the AI memory market intensifies. Samsung expects its HBM shipment volume to more than triple this year compared to last year and is currently building a new production line at its Pyeongtaek Campus.

Its local peer and HBM leader, SK hynix, is ramping up its HBM4 capacity to meet potential additional demand from Nvidia.

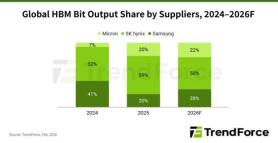

With Micron currently trailing in the supply chain competition, analysts expect Samsung and SK hynix to split the majority of HBM orders for the Vera Rubin chips.

Copyright ⓒ Aju Press All rights reserved.